Have you ever felt that doing things right is way too expensive? Well, you're not entirely wrong. The absolute best, bleeding-edge technology can significantly impact your wallet, and to be honest, it's often not worth it except for very few use cases.

But that's no excuse for doing things entirely wrong either! There is an acceptable middle ground where you can invest a little to get a lot. In this article, I'll show you a few things you can do in your AWS solution to improve your Reliability, Performance Efficiency, Security, Operational Excellence, or Cost Optimization, or perhaps more than one of those. They're not difficult, and they're not expensive. Each one takes very little time and money, and delivers a big impact.

Implementing MFA in AWS

AWS services involved: IAM or IAM Identity Center

Well-Architected pillars: Security

Description: It's pretty simple: always use MFA. MFA stands for Multi-Factor Authentication. Possible authentication factors are something you know (e.g. your password), something you possess (e.g. your phone), something inherent to you (e.g. your fingerprints), and your location. A simple way to implement this is to require a code from an MFA device, such as the Google Authenticator app (available for Android and iPhone), at login. That way, someone who knows your password still can't log in without access to your phone as well.

Impact:

If someone knows your password, they won't be able to log in with just that; they'd need another factor.

It can be a bit annoying to pull your phone out of your pocket every time you want to log in, but it's a lot less annoying than dealing with a hijacked account.

How to implement: Go to IAM or IAM Identity Center and set up your MFA device for your user.

A few tips:

In IAM Identity Center, you can require that users have an MFA device set up.

In IAM Policies, you can require MFA for some actions, such as deleting resources.
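The last tip can be sketched as a small policy generator. This is a minimal illustration, not a definitive policy: the function name and the list of protected actions are assumptions chosen for the example, and the condition relies on the `aws:MultiFactorAuthPresent` global condition key.

```python
import json


def build_require_mfa_policy(protected_actions):
    """Return an IAM policy document that denies the given actions
    unless the request was authenticated with MFA.

    The function name and the action list passed in are illustrative
    assumptions, not something prescribed by AWS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyWithoutMFA",
                "Effect": "Deny",
                "Action": list(protected_actions),
                "Resource": "*",
                "Condition": {
                    # BoolIfExists also matches requests where the key is
                    # missing entirely, such as calls signed with
                    # long-lived access keys.
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


# Example: protect a few destructive actions (hypothetical selection).
policy = build_require_mfa_policy(
    ["ec2:TerminateInstances", "s3:DeleteBucket", "rds:DeleteDBInstance"]
)
print(json.dumps(policy, indent=2))
```

Because the statement is a Deny, it only removes permissions: users still need a separate Allow for these actions, and the Deny blocks them whenever MFA wasn't used.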

Common mistakes to avoid:

Not using MFA.

Master AWS with Real Solutions and Best Practices. Subscribe to the free newsletter Simple AWS. 3000 engineers and tech experts already have.

AWS Multi-account setup with single sign-on

AWS services involved: AWS Organizations, AWS IAM Identity Center

Well-Architected pillars: Operational Excellence, Security

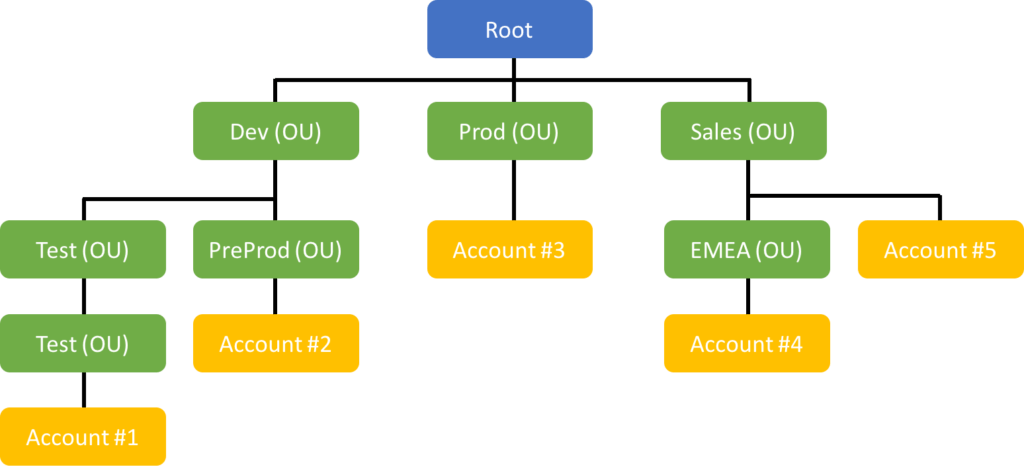

Description: Don't throw every AWS resource (EC2 instances for dev and prod, S3 for backups, etc.) into the same AWS account. Instead, create multiple accounts (as many as you need), each with a clearly defined purpose (e.g., dev environment for app 1), all grouped under the same Organization. Use consolidated billing so you only need to enter your payment details once. Use AWS IAM Identity Center (formerly AWS Single Sign-On) so you have just one username and password for all accounts. Limit who can access which accounts.

Impact:

Workload separation and much easier work isolation

Ability to introduce Service Control Policies (SCPs) to enforce specific limitations across your entire organization

Better security and access control between accounts and environments

No long-lived access keys

How to implement: Create a new AWS account and use it to create an AWS Organization, enabling all features. Do this even if you already have an AWS account, and invite your existing AWS account into the Organization. Create all the accounts that you need (you can create more later). Go to the IAM Identity Center service and set it up in your preferred region. If you want to use an existing identity provider such as Microsoft AD or Google Workspace, connect it. Otherwise, create your SSO users directly in IAM Identity Center. Organize users into groups and assign permissions to each group.

A few tips:

Organize your accounts in a hierarchy and apply policies at the root level or Organizational Units (OUs) to enforce specific limitations across your organization.

Use AWS IAM Identity Center to enable single sign-on across your AWS accounts, which simplifies managing access to multiple accounts.

You can use the AWS CLI with IAM Identity Center; the AWS CLI documentation explains how to configure it.

You can create your own users in IAM Identity Center, or you can connect your existing identity provider such as Okta, Microsoft AD or Google Workspace (formerly G Suite).

Always use MFA.

There are a few accounts that are common in multi-account setups: Security (for security stuff such as access logs and related tools, appliances, etc), Shared Services (anything that's for multiple accounts, environments or apps, such as CI/CD), Log Archive (archiving logs), Backups (it's easier to isolate them and ensure nobody has write or delete access if they're in a separate account), Networking (shared network services, for example NAT Gateways shared with all accounts).

There's no cost for cross-account traffic, and resources can be shared with Resource Access Manager to, for example, deploy an EC2 instance in a subnet that belongs to another account. For other stuff you'll need IAM roles to be assumed by your services in another account and trust policies that permit that.
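The trust policy mentioned in the last tip could look roughly like this. It's a sketch under stated assumptions: the function name is made up for the example, and `111122223333` is a placeholder account ID, not a real one.

```python
import json


def build_cross_account_trust_policy(trusted_account_id, require_mfa=False):
    """Return a trust policy that lets principals from another account
    assume the role this policy is attached to.

    `trusted_account_id` is the 12-digit ID of the account whose
    principals should be trusted. Hypothetical helper for illustration.
    """
    if not (len(trusted_account_id) == 12 and trusted_account_id.isdigit()):
        raise ValueError("AWS account IDs are 12 digits")

    statement = {
        "Effect": "Allow",
        # Trusting the account as a whole: principals in that account
        # still need their own permission to call sts:AssumeRole.
        "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
        "Action": "sts:AssumeRole",
    }
    if require_mfa:
        statement["Condition"] = {
            "Bool": {"aws:MultiFactorAuthPresent": "true"}
        }
    return {"Version": "2012-10-17", "Statement": [statement]}


# Placeholder account ID, used only for the example.
trust_policy = build_cross_account_trust_policy("111122223333", require_mfa=True)
print(json.dumps(trust_policy, indent=2))
```

The trust policy only says who may assume the role. What the role can actually do in its own account is defined separately, by the permissions policies attached to it.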

Common mistakes to avoid:

Overcomplicating the account structure - keep it simple and clear.

Not having a proper naming convention for your accounts.

When migrating from a single account to a multi-account setup, don't use your existing account as the Organization root. It's not a good practice to deploy resources on the root account (AWS calls it the management account), because it can't be limited by Service Control Policies. Instead, create a new account (you'll need to add payment details just this once), use it to create an AWS Organization, and then invite your existing account.

Don't grant everyone access to everything. Do your developers really need write access to the production account? Grant only what's necessary, and review the permissions and the necessities periodically.

Using AWS Service Control Policies

AWS services involved: AWS Organizations

Well-Architected pillars: Security

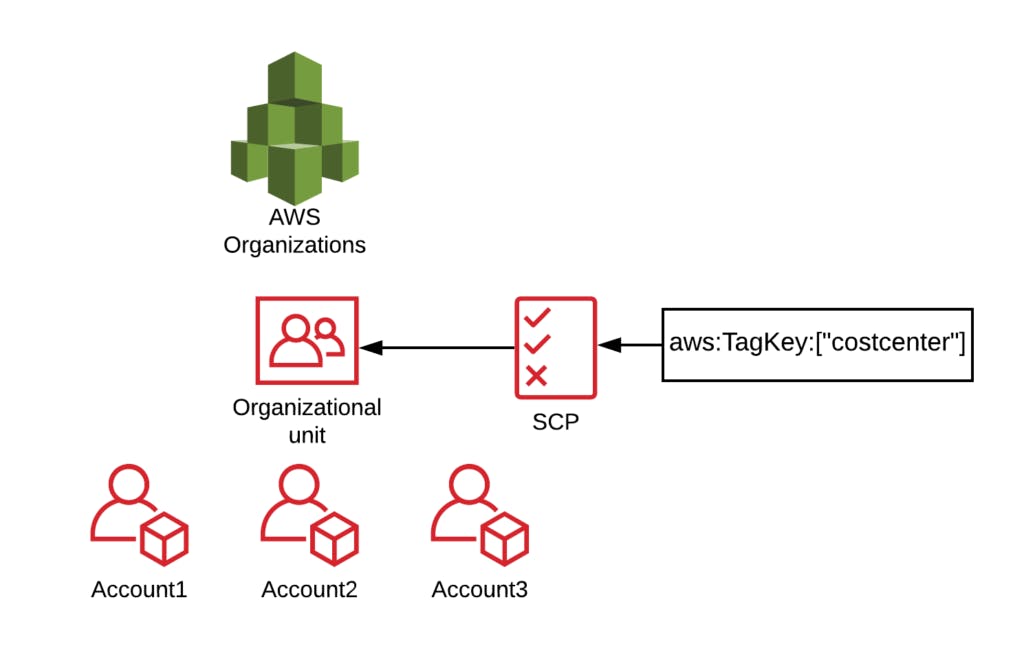

Description: Service Control Policies (SCPs) are like IAM Policies, but they apply to the whole account (or all accounts in the Organizational Unit), and instead of actually granting permissions, they specify the maximum permissions an account can have. SCPs require the account to be in an Organization (see above). They affect the root user and every IAM user or role, so they can be used to prevent any action in an account. They're typically used as safeguards, e.g. to deny all actions in a region you don't use, or to only allow creating resources if they have a certain tag.
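As an illustration of the region safeguard, here's a minimal sketch of a generator for such an SCP. The function name, the allowed regions and the list of exempted global services are assumptions for the example; review which services you need to exempt before using anything like this.

```python
import json


def build_region_guardrail_scp(allowed_regions, exempt_actions=()):
    """Return an SCP that denies requests made to any region outside
    `allowed_regions`.

    `exempt_actions` lists actions of global services that should keep
    working regardless of region. Hypothetical helper for illustration.
    """
    statement = {
        "Sid": "DenyOutsideAllowedRegions",
        "Effect": "Deny",
        "Resource": "*",
        "Condition": {
            "StringNotEquals": {"aws:RequestedRegion": list(allowed_regions)}
        },
    }
    if exempt_actions:
        # NotAction: the Deny applies to everything except these actions.
        statement["NotAction"] = list(exempt_actions)
    else:
        statement["Action"] = "*"
    return {"Version": "2012-10-17", "Statement": [statement]}


# Illustrative values only: adjust regions and exemptions to your setup.
scp = build_region_guardrail_scp(
    allowed_regions=["us-east-1", "eu-west-1"],
    exempt_actions=["iam:*", "organizations:*", "sts:*", "support:*"],
)
print(json.dumps(scp, indent=2))
```

Global services such as IAM are served from a single region, which is why a region guardrail usually exempts them through `NotAction` rather than denying everything.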

Impact:

Guardrails around what people can do in an account

Ability to deny actions to every user, including the root user

How to implement: On the root account of your organization go to the Organizations service and make sure that all features are enabled for your organization. Go to Policies and Service Control Policies. Write a policy or copy one from the official example policies or other good sources. Then go to Accounts, choose an account or organizational unit, click on the Policies tab and attach your new policy to the account/OU.

A few tips:

Adding an explicit allow in an SCP doesn't automatically grant permissions to users; it just means the account can do those actions. An explicit deny will deny the actions to the root user, every IAM user and every IAM role. An SCP that allows everything is therefore the equivalent of not having SCPs (and when you enable SCPs in the organization, that SCP, named FullAWSAccess, is automatically created and attached).

SCPs are the only way to limit the root user. It's a good practice to deny all actions by the root user.

Another standard guardrail is to prevent the account from leaving the organization.

SCPs attached to an organizational unit are inherited by child organizational units and child accounts.

SCPs have a limit of 5120 bytes, and only 5 SCPs can be directly attached to an account or organizational unit. Inherited SCPs don't count towards this limit. To circumvent it, combine multiple effects into a single SCP, delete unnecessary characters such as spaces, and create organizational units to benefit from SCP inheritance.
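A few of these tips can be combined in one sketch: a single SCP with two guardrails (deny the root user, deny leaving the organization), serialized without unnecessary whitespace and checked against the size limit. The helper names are made up for the example.

```python
import json

SCP_MAX_SIZE = 5120  # size limit mentioned above


def build_baseline_scp():
    """Return one SCP that combines two common guardrails."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyRootUser",
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                # Matches the root user of any member account.
                "Condition": {
                    "StringLike": {"aws:PrincipalArn": "arn:aws:iam::*:root"}
                },
            },
            {
                "Sid": "DenyLeavingOrganization",
                "Effect": "Deny",
                "Action": "organizations:LeaveOrganization",
                "Resource": "*",
            },
        ],
    }


def minify_policy(policy):
    """Serialize a policy without spaces or newlines between tokens."""
    return json.dumps(policy, separators=(",", ":"))


def fits_scp_limit(policy):
    """Check the minified policy against the SCP size limit."""
    return len(minify_policy(policy).encode("utf-8")) <= SCP_MAX_SIZE


baseline = build_baseline_scp()
pretty_size = len(json.dumps(baseline, indent=2))
compact_size = len(minify_policy(baseline))
print(f"pretty: {pretty_size} chars, minified: {compact_size} chars")
print("fits in one SCP:", fits_scp_limit(baseline))
```

Generating SCPs from code like this makes it easier to merge several statements into one policy and to catch an oversized policy before trying to attach it.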

Common mistakes to avoid:

Forgetting that any action not explicitly allowed by an SCP is denied. For example, if you remove the default SCP that allows all actions, everything is denied.

SCPs do not apply at all to the root account of the organization. For that reason, that account is more vulnerable than the other ones. The best practice is to use the root account as little as possible, and never deploy workloads there.

Allowing everyone access to the root account of the organization, so that they can modify SCPs. SCPs that anyone can change can still help prevent mistakes and accidents, but they won't protect you from an attacker.

Encrypting AWS EBS volumes

AWS services involved: AWS Key Management Service (KMS), Amazon Elastic Block Store (EBS)

Well-Architected pillars: Security

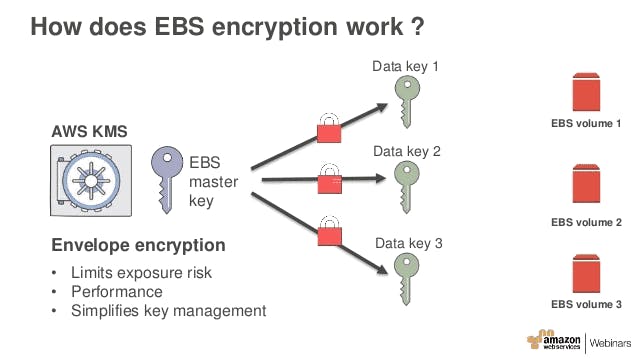

Description: Data at rest should be encrypted. When you create an EBS volume you have the option to encrypt it just by changing a dropdown from No to Yes. Simple enough, right? Well, you might forget. Let's be honest, you will forget, at some point. Luckily, you have the option to enable encryption by default, which means that all EBS volumes will always be encrypted. It's pretty simple to enable, but keep in mind that it's enabled on a per-region basis and that the KMS keys used for encryption are regional.

Impact:

All your EBS volumes will be encrypted

All your EBS snapshots will also be encrypted, with the same KMS key used for the volume. This means that to share a snapshot with another account you will need to grant that account permissions to use the KMS key.

You won’t be able to share encrypted AMIs publicly, and any AMIs you share across accounts need access to your chosen KMS key.

You won’t be able to share snapshots or AMIs at all if you encrypt them with the AWS managed key; use a customer managed key for anything you plan to share.

The default encryption setting is per-region, and so are the KMS keys.

Amazon EBS does not support asymmetric CMKs.

How to implement: Open the EC2 console. On the EC2 Dashboard scroll down to Account attributes and click EBS encryption. Click Manage, tick the box below Always encrypt new EBS volumes and click Update EBS Encryption. That's it.
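Since the setting is per-region, the same steps can be scripted across regions. Here's a minimal sketch that assumes you pass in one boto3 EC2 client per region; the function name is hypothetical, while `get_ebs_encryption_by_default` and `enable_ebs_encryption_by_default` are the boto3 EC2 client calls for this setting.

```python
def enable_default_ebs_encryption(ec2_clients_by_region):
    """Turn on EBS encryption by default in every given region.

    `ec2_clients_by_region` maps a region name to an EC2 client for
    that region. Returns a dict of region -> final setting.
    """
    status = {}
    for region, client in ec2_clients_by_region.items():
        current = client.get_ebs_encryption_by_default()
        if not current["EbsEncryptionByDefault"]:
            client.enable_ebs_encryption_by_default()
        # Read the setting back so the result reflects the real state.
        final = client.get_ebs_encryption_by_default()
        status[region] = final["EbsEncryptionByDefault"]
    return status


# Usage sketch (requires boto3 and AWS credentials; regions are examples):
#
#   import boto3
#   regions = ["us-east-1", "eu-west-1"]
#   clients = {r: boto3.client("ec2", region_name=r) for r in regions}
#   print(enable_default_ebs_encryption(clients))
```

Passing the clients in, instead of creating them inside the function, keeps the function easy to test and lets you decide which regions and credentials to use.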

A few tips:

You can use different KMS keys for different volumes or workloads, and you can use policies on those KMS keys to control who can access the volumes and their snapshots

When you copy a snapshot (in the same region or across regions) you can specify a new KMS key to encrypt it. You can do this manually, with an automated EBS snapshot script, or create automated EBS snapshots with Data Lifecycle Manager.
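Copying a snapshot with a new KMS key can be sketched like this. The function name and the example IDs are placeholders; `copy_snapshot` is the boto3 EC2 client call, and the client is assumed to be created for the destination region.

```python
def copy_snapshot_with_key(ec2_client, source_region, source_snapshot_id,
                           kms_key_id, description=""):
    """Copy a snapshot and encrypt the copy with the given KMS key.

    `ec2_client` must be an EC2 client for the DESTINATION region, and
    `kms_key_id` must refer to a key in that same region, because KMS
    keys are regional. Returns the ID of the new snapshot.
    """
    response = ec2_client.copy_snapshot(
        SourceRegion=source_region,
        SourceSnapshotId=source_snapshot_id,
        Encrypted=True,
        KmsKeyId=kms_key_id,
        Description=description,
    )
    return response["SnapshotId"]


# Usage sketch (requires boto3 and AWS credentials; IDs are placeholders):
#
#   import boto3
#   destination = boto3.client("ec2", region_name="eu-west-1")
#   new_id = copy_snapshot_with_key(
#       destination,
#       source_region="us-east-1",
#       source_snapshot_id="snap-0123456789abcdef0",
#       kms_key_id="alias/my-snapshot-key",
#   )
```

The copy is a new snapshot with its own ID, so the original stays encrypted with its original key until you delete it.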

Common mistakes to avoid:

EBS encryption by default might not play nice with AWS Server Migration Service. If your migration jobs are failing, try turning off EBS encryption by default. Instead, you can enable AMI encryption when you create the replication job.

Wrapping up

This was just a list of good practices that you can implement with a really small investment of time and money, and that deliver a big impact. Low cost, and great value for the time and money you put in.

It's by no means an exhaustive list. It could be a good starting point, but don't stop here. Keep finding the low-hanging fruit, the simple things you can improve, and keep improving your cloud solution.

You probably don't need big and super expensive solutions. Don't overpay, don't overengineer. But don't settle.

If you'd like to know more about me, you can find me on LinkedIn or at www.guilleojeda.com